Amazon Web Services (AWS) is a cloud platform best known for its Infrastructure-as-a-Service (IaaS) offerings, and it has become one of the main gateways to cloud computing. Its services and organizational tools range from content delivery to cloud storage and beyond.

Building cloud-ready applications, however, means addressing many concerns so that the components and functions within the application work together smoothly. Let’s start with what "cloud-ready" means and how it differs from the cloud-native approach.

Cloud-Ready Architecture vs. Cloud-Native

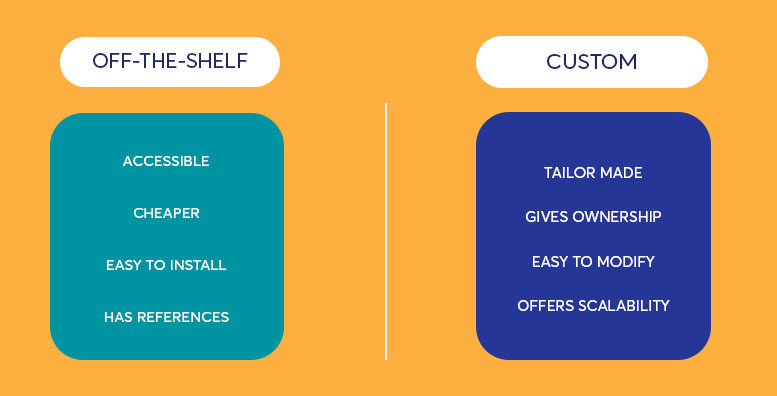

Cloud-native and cloud-ready architectures belong to the same field, but they start from opposite directions. Cloud-native applications are designed from the outset for container-based deployment on the public cloud and are typically built with agile software development practices.

A cloud-ready architecture, on the other hand, is a classic enterprise application that has been adapted to run in the cloud. Such an application may not be able to use every capability the public cloud offers, but the adapted architecture can still deliver a significant number of productive assets.

Whichever approach you take, the AWS Well-Architected Framework describes aspects you need to build in and watch for, so that the application rests on a solid foundation that supports its core functions and meets the requirements of a cloud-ready architecture on AWS.

The AWS Well-Architected Framework was originally built on five pillars (AWS added a sixth, Sustainability, in 2021). Together they help workloads move to the cloud smoothly and meet client expectations with timely, stable deliverables. The five original pillars are as follows:

Operational Excellence

Operational excellence covers how an organization runs and monitors its workloads to meet its business goals, gain insight, and continually improve its processes so that the system keeps delivering business value. Its design principles are as follows:

- Perform operations as code: define the entire workload (infrastructure, applications, and operational procedures) in code so that changes are consistent and human error is kept to a minimum.

- Make frequent, small, reversible changes so that each update can be rolled back without damaging the system.

- Refine operations procedures frequently as the workload evolves. Schedule regular sessions (AWS calls them game days) in which your team reviews and improves the procedures and becomes familiar with the changes.

- Anticipate failure: identify potential sources of failure, test failure scenarios frequently to understand their impact, and make sure your team knows how to respond.

- Learn from all operational failures: share the lessons from incidents and experiments with your team and across the organization.
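To make "operations as code" and "small, reversible changes" more concrete, here is a minimal sketch that assumes the workload's resources can be described as a plain dictionary of `{resource_name: settings}`. The helpers `plan_changes`, `apply_in_batches`, and `rollback` are hypothetical and not part of any AWS SDK; in practice, infrastructure-as-code tools such as AWS CloudFormation fill this role.

```python
from dataclasses import dataclass, field


@dataclass
class ChangePlan:
    """What must change to move the current state to the desired (declared-in-code) state."""
    to_create: dict = field(default_factory=dict)
    to_update: dict = field(default_factory=dict)
    to_delete: list = field(default_factory=list)


def plan_changes(desired, current):
    """Compare two {resource_name: settings_dict} maps without modifying either."""
    plan = ChangePlan()
    for name, settings in desired.items():
        if name not in current:
            plan.to_create[name] = settings
        elif current[name] != settings:
            plan.to_update[name] = settings
    plan.to_delete = sorted(name for name in current if name not in desired)
    return plan


def apply_in_batches(plan, current, batch_size=1):
    """Apply the plan a few resources at a time, returning one undo snapshot per batch."""
    # Each step is (name, new_settings); new_settings of None means "delete this resource".
    steps = list(plan.to_create.items()) + list(plan.to_update.items())
    steps += [(name, None) for name in plan.to_delete]
    history = []
    for start in range(0, len(steps), batch_size):
        batch = steps[start:start + batch_size]
        # Record the previous settings (None = did not exist) before touching anything.
        history.append({name: current.get(name) for name, _ in batch})
        for name, new_settings in batch:
            if new_settings is None:
                current.pop(name, None)
            else:
                current[name] = new_settings
    return history


def rollback(current, snapshot):
    """Reverse one batch by restoring the settings recorded in its snapshot."""
    for name, previous in snapshot.items():
        if previous is None:
            current.pop(name, None)
        else:
            current[name] = previous
```

Applying one resource per batch keeps every step small, and replaying the snapshots in reverse order with `rollback` returns the workload to its starting state.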

Performance Efficiency

Performance efficiency means using computing resources efficiently to meet system requirements, and maintaining that efficiency as demand changes and technologies evolve, without disrupting the application. Its design principles are as follows:

- Use advanced technologies as managed services: delegate their setup and operation to the cloud vendor so your team can focus on incorporating them into your application.

- Go global: deploy your workload in multiple AWS Regions to lower latency for users around the world at minimal cost.

- Use serverless architectures: they remove the need to run and maintain physical servers for traditional compute activities, which also lowers the transactional cost of managing those servers.

- Experiment more often: because virtual resources are easy to provision, you can quickly compare different instance types, storage options, and configurations.

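- Consider mechanical sympathy: choose the technology approach that best aligns with your goals, for example by matching your data access patterns to the right database or storage option.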
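Two of these principles, going global and experimenting often, boil down to measuring and comparing. The sketch below assumes you have already collected latency samples per Region and throughput and hourly cost per candidate configuration; the function names, the configuration names, and all numbers are hypothetical, while `us-east-1`, `eu-west-1`, and `ap-southeast-1` are real AWS Region codes used only as labels.

```python
import statistics


def pick_region(latency_samples_ms):
    """Return the Region with the lowest median latency (the median resists outliers)."""
    if not latency_samples_ms:
        raise ValueError("at least one Region is required")
    return min(latency_samples_ms,
               key=lambda region: statistics.median(latency_samples_ms[region]))


def cheapest_config(results, min_rps):
    """Among configurations meeting the throughput target, pick the lowest cost per million requests.

    results maps a configuration name to (requests_per_second, hourly_cost_usd).
    """
    def cost_per_million(rps, hourly_cost):
        requests_per_hour = rps * 3600
        return hourly_cost / requests_per_hour * 1_000_000

    eligible = {name: r for name, r in results.items() if r[0] >= min_rps}
    if not eligible:
        raise ValueError("no configuration meets the throughput target")
    return min(eligible, key=lambda name: cost_per_million(*eligible[name]))


samples = {
    "us-east-1": [95, 102, 99],
    "eu-west-1": [21, 25, 19],
    "ap-southeast-1": [180, 175, 190],
}
experiments = {
    "config-a": (1200, 0.40),
    "config-b": (900, 0.20),   # cheapest per request, but too slow for the target
    "config-c": (2000, 0.90),
}
print(pick_region(samples))
print(cheapest_config(experiments, min_rps=1000))
```

Filtering on the throughput target before comparing unit cost keeps an experiment from "winning" simply because it is cheap but undersized.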

Reliability

Reliability is the ability of a workload to perform its intended function correctly and consistently throughout its lifetime. Keep the following principles in mind when building cloud applications on AWS:

- Automatically recover from failure: monitor key metrics and trigger automated recovery whenever a threshold is breached. With sufficiently sophisticated automation, the system can even anticipate and remediate failures before they affect it.

- Test recovery procedures: simulate failure scenarios to validate your recovery procedures and fix problems before they happen in production.

- Scale horizontally: replace one large resource with multiple smaller ones so that a single failure affects only a fraction of the workload and no single resource becomes a point of failure.

- Stop guessing capacity: monitor demand and utilization instead of assuming capacity needs, because resource saturation is a common cause of failure in on-premises workloads.

- Manage change through automation: make infrastructure changes with automation so they can be tracked and reviewed.
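The first and third reliability principles can be illustrated with a small sketch. `ThresholdAlarm` is a hypothetical class, loosely modeled on the way an Amazon CloudWatch alarm evaluates several consecutive periods before acting; the `remediate` callback stands in for whatever recovery action you automate (the `"replace-instance"` label is made up). The helper `remaining_capacity_after_one_failure` shows why many small resources limit the impact of a single failure.

```python
from collections import deque


class ThresholdAlarm:
    """Calls `remediate` once a metric exceeds `threshold` for N consecutive datapoints."""

    def __init__(self, threshold, evaluation_periods, remediate):
        self.threshold = threshold
        self.recent = deque(maxlen=evaluation_periods)  # True = datapoint breached
        self.remediate = remediate
        self.in_alarm = False

    def record(self, value):
        """Add one datapoint; return True only if this datapoint triggered remediation."""
        self.recent.append(value > self.threshold)
        breached = len(self.recent) == self.recent.maxlen and all(self.recent)
        if breached and not self.in_alarm:
            self.in_alarm = True   # fire once per alarm episode, not on every datapoint
            self.remediate()
            return True
        if not breached:
            self.in_alarm = False  # metric recovered; the next sustained breach fires again
        return False


def remaining_capacity_after_one_failure(instance_count):
    """Fraction of capacity left when one of `instance_count` equal instances fails."""
    if instance_count < 1:
        raise ValueError("instance_count must be at least 1")
    return (instance_count - 1) / instance_count


actions = []
alarm = ThresholdAlarm(threshold=80.0, evaluation_periods=3,
                       remediate=lambda: actions.append("replace-instance"))
fired = [alarm.record(cpu) for cpu in [70, 85, 90, 95, 97, 60]]
```

Requiring several consecutive breaches avoids reacting to a single noisy datapoint. On the capacity side, losing a single large instance leaves nothing running, while losing one of four smaller instances still leaves 75% of capacity.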

Security

Security is a crucial concern for any application, and especially for cloud-based ones. The security pillar focuses on protecting the application's data, assets, and systems. Follow these principles to maintain a secure platform when building cloud architecture:

- Enable traceability: monitor, alert on, and audit actions and changes in your environment in real time.

- Apply security at all layers: enforce security and verification at every layer of the application rather than only at its perimeter.

- Implement a strong identity foundation: enforce least privilege and strict authorization for every interaction with AWS resources.

- Protect data in transit and at rest: classify data into sensitivity levels and apply mechanisms such as encryption and access control where appropriate.

- Keep people away from data: use tools that reduce or eliminate the need for direct access to data, lowering the risk of mishandling or misuse.

- Prepare for security events: run incident-response simulations to test your security features and automated responses, and refine your response processes based on the results.
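Strict, least-privilege authorization usually follows two rules: everything is denied by default, and an explicit deny overrides any allow. The sketch below is a deliberately simplified evaluator built on those two rules; `Statement` and `is_allowed` are hypothetical names, and real IAM policy evaluation involves more inputs, such as conditions, resource-based policies, and permissions boundaries. The bucket name `example-bucket` is made up.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Statement:
    effect: str      # "Allow" or "Deny"
    actions: tuple   # action patterns, e.g. ("s3:GetObject",); "*" is a wildcard
    resources: tuple # resource patterns, e.g. ("arn:aws:s3:::example-bucket/*",)

    def matches(self, action, resource):
        return (any(fnmatchcase(action, p) for p in self.actions)
                and any(fnmatchcase(resource, p) for p in self.resources))


def is_allowed(statements, action, resource):
    """Deny by default; any matching Deny statement overrides every matching Allow."""
    matching = [s for s in statements if s.matches(action, resource)]
    if any(s.effect == "Deny" for s in matching):
        return False
    return any(s.effect == "Allow" for s in matching)


policy = [
    # Read-only access to the bucket and its objects.
    Statement("Allow", ("s3:GetObject", "s3:ListBucket"),
              ("arn:aws:s3:::example-bucket", "arn:aws:s3:::example-bucket/*")),
    # No S3 action of any kind on the restricted prefix.
    Statement("Deny", ("s3:*",), ("arn:aws:s3:::example-bucket/restricted/*",)),
]
```

With this policy, reading `reports/q1.csv` is allowed, reading anything under `restricted/` is denied even though the Allow statement also matches, and writing anywhere is denied because no statement grants it.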

Cost Optimization

Cost optimization is crucial for cloud-ready applications because it lets you run your services at the lowest price point while also helping you forecast future spending. It also keeps track of the expansion your business will need, and what that expansion will cost, once the business takes off.

Cost optimization rests on the following design principles:

- Implement cloud financial management: invest time and resources in building your organization's knowledge and capability in cloud financial management.

- Adopt a consumption model: pay only for the resources you use. Tracking how many hours per day each environment is actually needed lets you shut it down the rest of the time and cut costs further.

- Measure overall efficiency: compare the business output of a workload with the cost of delivering it, then increase output and cut spending on components that contribute little or nothing.

- Stop spending money on undifferentiated heavy lifting: let AWS handle work such as racking, stacking, and powering servers, so you do not spend on IT infrastructure that is not your core business.

- Analyze and attribute expenditure: identify the usage and cost of your systems, attribute them to individual workloads and owners, and use that data to optimize spending and increase ROI.
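The consumption-model and efficiency principles come down to simple arithmetic. This sketch assumes a flat, hypothetical hourly rate and a made-up monthly bill and order count; the function names are illustrative only and do not correspond to any AWS billing API.

```python
HOURS_PER_WEEK = 24 * 7  # 168 hours in an always-on week


def weekly_cost(hourly_rate_usd, hours_running):
    """Cost of one resource for a week at a flat hourly rate."""
    return hourly_rate_usd * hours_running


def savings_from_schedule(hours_per_day, days_per_week):
    """Fraction of an always-on bill avoided by running only on a schedule."""
    scheduled_hours = hours_per_day * days_per_week
    return 1 - scheduled_hours / HOURS_PER_WEEK


def cost_per_unit(total_cost_usd, units_of_output):
    """Unit cost: spend divided by business output (for example, orders processed)."""
    if units_of_output <= 0:
        raise ValueError("units_of_output must be positive")
    return total_cost_usd / units_of_output


always_on = weekly_cost(0.10, HOURS_PER_WEEK)   # hypothetical $0.10/hour rate
office_hours = weekly_cost(0.10, 8 * 5)         # 8 hours a day, weekdays only
saved = savings_from_schedule(8, 5)
unit_cost = cost_per_unit(1200.0, 48000)        # hypothetical monthly bill and orders
```

At the assumed rate, an always-on environment costs about $16.80 per week, while running it 8 hours a day on weekdays (40 of 168 hours) costs about $4.00, roughly 76% less. Tracking `cost_per_unit` over time shows whether spending grows faster or slower than the output it buys.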

Final Thoughts

The AWS Well-Architected Framework gives you a solid basis for building a cloud-ready application architecture on Amazon Web Services. Following its five pillars helps you operate a reliable, secure, efficient, and cost-effective system, keep the development workflow smooth, and arrive at a well-structured cloud-ready application architecture.